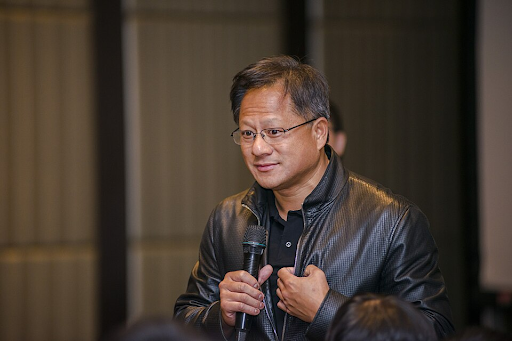

The scale of Nvidia’s ambition was on full display at CES as CEO Jensen Huang pulled back the curtain on the company’s future hardware infrastructure. The headline grabber was the concept of “pods”: massive clusters of chips from the new Vera Rubin platform, linked together to form supercomputing units. Huang revealed that a single pod could contain more than 1,000 chips working together as one system.

This architecture is designed for the era of massive AI models. The Vera Rubin platform, arriving later this year, features a flagship server with 72 graphics processing units (GPUs) and 36 central processing units (CPUs). Linking these servers into pods lets a single workload be spread across far more processors than one server holds, which is what the rapidly growing data-processing demands of AI require.

Nvidia pitches these pods as the factories of the future. They are built to generate “tokens,” the building blocks of AI responses, with what Nvidia says is ten times the efficiency of current systems. That efficiency matters for scaling AI services to billions of users without a matching surge in energy consumption.

The practical application of this power extends to Nvidia’s new automotive ventures. The reasoning capabilities of the Alpamayo software demand the kind of low-latency, high-throughput computing that these pods provide in data centers, and that onboard computers must deliver in the vehicles themselves.

By selling the pod architecture, Nvidia is defining the physical structure of the AI cloud. It is not just selling chips; it is selling the blueprint for the modern data center. As demand for AI grows, that strategy positions the infrastructure of the internet to look increasingly like an Nvidia design.